GitHub Actions Limitations and Gotchas

Background

I’ve been leading a team at my day job to migrate every engineering team to GitHub Actions (GHA).

Our existing system was an in-house DSL built on top of Jenkins. We had significant problems scaling Jenkins compute for various reasons, and the team couldn’t effectively support the engineering organization as it continued to grow.

While the migration is going smoothly and engineers are far happier with GitHub Actions than with our in-house system, we ran into quite a few issues that I want to share with others.

This post is a brief experience report for others evaluating GitHub Actions.

Gotchas with Actions on GitHub Enterprise Server

For additional background, we’re using GitHub Enterprise Server (GHES), the offering you self-host in your own data center. GHES is different from GitHub’s Enterprise Cloud (GHEC) offering. Confusing, I know.

Caching isn’t available

actions/cache@v2 doesn’t support GHES.

https://github.com/actions/cache/issues/505

Users of GHA on GHES will be using self-hosted runners, and those runners are often containerized. Containerized runners don’t persist dependencies, Docker images, etc., between runs, which can make builds take longer.

For example, if you’re used to node_modules being cached between runs on your Jenkins node, that won’t be the case anymore.
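For comparison, on GitHub.com a workflow would typically restore an npm cache with actions/cache@v2 roughly like this. It’s a sketch assuming an npm project with a package-lock.json; per the issue linked above, this step wasn’t usable on GHES at the time of writing. It caches ~/.npm rather than node_modules because `npm ci` deletes node_modules before installing.

```yaml
steps:
  - uses: actions/checkout@v2
  # Restore ~/.npm from an earlier run when package-lock.json is unchanged.
  # Works on GitHub.com; not supported on GHES at the time of writing.
  - uses: actions/cache@v2
    with:
      path: ~/.npm
      key: ${{ runner.os }}-npm-${{ hashFiles('**/package-lock.json') }}
      restore-keys: |
        ${{ runner.os }}-npm-
  - run: npm ci
```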

GitHub Enterprise Server is behind GitHub Enterprise Cloud

GHES is permanently behind GitHub Enterprise Cloud. The SaaS offering always has the latest features, which can be frustrating for GHES users.

GitHub released its feature for limiting workflow concurrency back in April, but at the time of writing (September 4, 2021), it still isn’t available on GHES.

Our enterprise contact said it should ship in the next GHES release in September, but it’s frustrating to see a feature that would solve a problem you’re having, only to find out it isn’t available in GHES.
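On GitHub.com, the feature is a `concurrency` key at the workflow (or job) level. A minimal sketch of what it looks like; the `deploy-` group prefix is an arbitrary name chosen for illustration:

```yaml
name: deploy
on:
  push:
    branches: [main]
# At most one run per group; a newer run cancels the one still in progress.
concurrency:
  group: deploy-${{ github.ref }}
  cancel-in-progress: true
jobs:
  deploy:
    runs-on: ubuntu-latest
    steps:
      - run: echo "Deploying ${{ github.sha }}"
```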

Using Public GitHub.com Actions

If you’re considering using GitHub Actions on a GHES, you might be wondering how one gets access to the public, open-source actions on GitHub.com.

GitHub provides actions that you can include in your workflow, such as actions/checkout to check out your code. On GitHub.com, these live under the actions organization (github.com/actions). The actions GitHub provides are copied to your GHES instance under an actions organization by default, or under any other organization name you specify.

The issue comes when you want to use other open-source actions: you’ll need to copy those over to your GHES instance yourself.

GitHub provides documentation to enable GitHub Connect, which allows you to connect your GHES to GitHub.com and use public actions as if they were present on your instance.

The problem is that you need a GitHub Enterprise Cloud organization to enable this. We have a GHES license, and as far as I know, we don’t have GHEC yet. This seems strange to me, since I’d expect most companies that self-host GHES not to use GHEC.

GitHub’s alternative is a CLI tool to manually sync actions from GitHub.com to your instance. It isn’t ideal, but it’s good enough for our current use cases.
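Once an action has been synced, workflows reference it by its owner/name on your instance rather than on GitHub.com. A sketch; the `synced-actions` organization and `some-action` repository are hypothetical destination names, not something GHES creates for you:

```yaml
steps:
  # Bundled with GHES under the "actions" organization by default.
  - uses: actions/checkout@v2
  # A third-party action copied onto the instance with the sync tool.
  # "synced-actions/some-action" is a hypothetical destination name.
  - uses: synced-actions/some-action@v1
```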

Docker Hub pull rate limiting

Docker has been cracking down on unauthenticated (free) pulls from Docker Hub. For self-hosted runners, you’ll need a Docker mirror or an authenticated pull-through cache if you expect many pulls from Docker Hub.
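At the workflow level, a simpler mitigation is to log in before pulling so requests count against an account instead of the runner’s anonymous, per-IP allowance. A sketch assuming docker/login-action has been synced to your GHES instance and that `DOCKERHUB_USERNAME` and `DOCKERHUB_TOKEN` are secrets you’ve created (both names are hypothetical):

```yaml
steps:
  # With no "registry" input, docker/login-action logs in to Docker Hub.
  - uses: docker/login-action@v1
    with:
      username: ${{ secrets.DOCKERHUB_USERNAME }}
      password: ${{ secrets.DOCKERHUB_TOKEN }}
  # This pull is now authenticated, so the account's limit applies.
  - run: docker pull node:14
```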

General Limitations

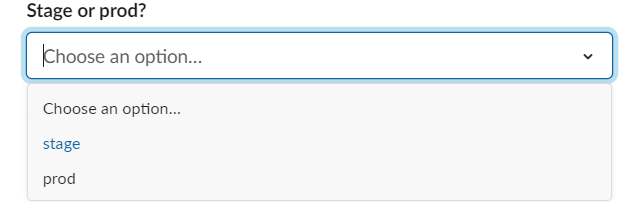

No dropdowns for manually triggered jobs

Unlike in Jenkins, you can’t provide a dropdown with a set of default options for workflow_dispatch jobs. As you can see in this thread, it’s a highly requested feature, and there hasn’t been any movement since July 2020.

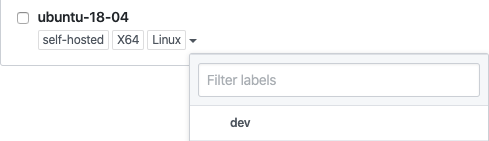
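The workaround available at the time of writing is a free-text input with a default, plus a step that rejects unexpected values. A sketch; the `environment` input and its allowed values are hypothetical:

```yaml
on:
  workflow_dispatch:
    inputs:
      environment:
        # Free-text input: the form can't restrict this to a fixed set of options.
        description: "Target environment (staging or production)"
        required: true
        default: "staging"

jobs:
  deploy:
    runs-on: self-hosted
    steps:
      # Validate the value ourselves, since the UI won't.
      - name: Validate environment input
        if: github.event.inputs.environment != 'staging' && github.event.inputs.environment != 'production'
        run: |
          echo "Unsupported environment: ${{ github.event.inputs.environment }}"
          exit 1
      - run: echo "Deploying to ${{ github.event.inputs.environment }}"
```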

Self-hosted runner default labels

When you’re using self-hosted runners, each runner comes pre-labeled with self-hosted, its CPU architecture, and its OS type. These default labels are frustrating because you can’t opt out of them, which has the following consequence:

If someone doesn’t target a specific kind of runner in their workflow (for example, they only request self-hosted), the job can land on any self-hosted runner registered with GHES, because every runner carries that label. This matters for us because our runners are on AWS, and we wanted to use IAM roles/instance profiles instead of using AWS credentials directly. A node designated as a deployer has broader access to do its job, whereas a CI node that builds artifacts and runs tests has less. Because of the default labels, a user who doesn’t explicitly ask for a CI node or a deployer node with elevated access could have their build land on either.
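The mitigation is to give each runner pool a custom label when registering it and to have every job request that label explicitly. When `runs-on` is a list, a runner must carry all of the listed labels. A sketch; the `ci` and `deployer` labels and the make targets are hypothetical:

```yaml
jobs:
  test:
    # Matches only runners labeled both "self-hosted" and "ci".
    runs-on: [self-hosted, ci]
    steps:
      - run: make test
  deploy:
    needs: test
    # Matches only runners with the (hypothetical) "deployer" label and its IAM role.
    runs-on: [self-hosted, deployer]
    steps:
      - run: make deploy
  lint:
    # Only a default label: a CI runner *or* a deployer runner could pick this up.
    runs-on: self-hosted
    steps:
      - run: make lint
```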

You can’t restart a single job of a workflow

In GitHub Actions, the YAML files that define your pipeline are called workflows. A workflow is made up of jobs, and each job is made up of steps. When your workflow inevitably fails, or you cancel it, you have to restart the entire workflow. There’s no way to continue from an individual step or to restart a single job within a workflow.

Here’s an example:

You have a pipeline that fetches dependencies, builds your code, runs tests, and uploads the built artifact. Say your workflow fails while uploading the artifact because of a flaky network connection. Your only choice is to restart the entire pipeline, starting again from fetching dependencies. The same applies to jobs that don’t depend on each other: if one independent job fails, you still have to rerun the whole workflow.
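The pipeline described above might look like this as a single workflow. A sketch assuming an npm project with `build`, `test`, and `lint` scripts that outputs to dist/, running on a hypothetical `ci` runner label:

```yaml
name: build
on: push
jobs:
  build:
    runs-on: [self-hosted, ci]
    steps:
      - uses: actions/checkout@v2
      - run: npm ci          # fetch dependencies
      - run: npm run build   # build
      - run: npm test        # run tests
      # A flaky network failure here means rerunning every step above.
      - uses: actions/upload-artifact@v2
        with:
          name: dist
          path: dist/
  lint:
    # Independent of "build", yet it is rerun too when the workflow restarts.
    runs-on: [self-hosted, ci]
    steps:
      - uses: actions/checkout@v2
      - run: npm ci
      - run: npm run lint
```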

Slow Log Output

The interface for viewing runner log output is mostly excellent. Every step of your job gets its own collapsible section. The problem is that logs sometimes come through slowly. In my experience, this gets worse when you refresh or go back to the log page of a job that’s still running. Once the job is done, the logs are reliable and solid. Note that my experience here is based on self-hosted runners on a self-hosted GitHub Enterprise Server; I’m not sure whether the experience on GitHub.com with GitHub-hosted runners is any better.

You can’t have actions that call other actions

There isn’t a way to compose actions. The closest thing is composite actions, but at the time of writing you can’t call other actions from a composite action. That makes it harder to build reusable composite actions and to divide ownership of them among teams.

For instance, you might want to do some setup on the runner before deploying, such as assuming a role in AWS. If you use an underlying action that helps you assume the role, you can’t include this as part of a series of pre-deploy composite steps.
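A sketch of such a pre-deploy composite action. The file path and role ARN are hypothetical, and `aws sts get-caller-identity` assumes the AWS CLI is installed on the runner. The commented-out step shows what we’d like to write but couldn’t, since composite actions could only contain `run` steps at the time of writing:

```yaml
# .github/actions/pre-deploy/action.yml (hypothetical path)
name: "Pre-deploy setup"
description: "Shared steps run before every deploy"
runs:
  using: "composite"
  steps:
    # Allowed: plain shell steps (each one must declare a shell).
    - run: aws sts get-caller-identity
      shell: bash
    # Not allowed at the time of writing: reusing another action here.
    # - uses: aws-actions/configure-aws-credentials@v1
    #   with:
    #     role-to-assume: arn:aws:iam::123456789012:role/deployer
    #     aws-region: us-east-1
```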

Metrics and observability

- No API to get a sense of queue size

- The GitHub Runner doesn’t stream workflow logs to stdout by default

- A lot of basic metrics aren’t exposed to operators

Workflow YAML syntax can be confusing

For the vast majority of use cases, the YAML syntax is sane and is similar to other CI systems. It gets super clumsy when you want to assign an output of a step to a variable that you can refer to later.

Also, their documentation was a little confusing to me.

Take a look at their example for an action with composite run steps:

    outputs:
      random-number:
        description: "Random number"
        value: ${{ steps.random-number-generator.outputs.random-id }}
    runs:
      using: "composite"
      steps:
        - id: random-number-generator
          run: echo "::set-output name=random-id::$(echo $RANDOM)"
          shell: bash

You can see that they’re using bash to generate a random number and assign it to a variable to use later in the workflow.

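This is confusing for a few reasons, especially coming from an Ansible background. You can’t simply assign STDOUT (or part of it) to a variable named after the step (random-number-generator). Instead, you have to print a specially formatted line with the ::set-output name=random-id::$(...) command, and then map that step output to the action’s output in a separate outputs block.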
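To follow the value all the way through, here’s a sketch of a workflow consuming that composite action. It assumes the action lives in the same repository at the hypothetical path .github/actions/random-number, which is why the repository is checked out first:

```yaml
jobs:
  example:
    runs-on: self-hosted
    steps:
      - uses: actions/checkout@v2
      # Run the composite action from this repository (hypothetical path).
      - id: random
        uses: ./.github/actions/random-number
      # The value travels: step output "random-id" -> action output
      # "random-number" -> steps.random.outputs.random-number here.
      - run: echo "Random number is ${{ steps.random.outputs.random-number }}"
        shell: bash
```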

Another inconsistency I’ve noticed is with if conditionals. From their documentation:

When you use expressions in an if conditional, you may omit the expression syntax (${{ }}) because GitHub automatically evaluates the if conditional as an expression. For more information about if conditionals, see “Workflow syntax for GitHub Actions.”

This slight inconsistency always costs me a rebuild once I realize I have to remove the expression syntax.
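A few steps side by side show where the expression syntax is optional and where it isn’t. A sketch; the echo commands are placeholders:

```yaml
steps:
  # In an if conditional, ${{ }} may be omitted...
  - if: github.ref == 'refs/heads/main'
    run: echo "on main"
  # ...and wrapping the same expression in ${{ }} is equivalent.
  - if: ${{ github.ref == 'refs/heads/main' }}
    run: echo "also on main"
  # An expression starting with ! needs ${{ }}, because ! is reserved in YAML.
  - if: ${{ !startsWith(github.ref, 'refs/tags/') }}
    run: echo "not a tag"
  # In other keys such as run, env, and with, ${{ }} is required;
  # without it the text is passed through literally.
  - run: echo "ref is ${{ github.ref }}"
```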

Closing

Overall, I very much enjoy using GitHub Actions. I think it’ll become the standard for CI/CD platforms. Every time I use GHA, I’m delighted by how quickly I can create workflows to automate a manual task and by the new patterns I find for using it.

I’m confident in the ability of the GitHub team to fix most of these issues in the future.

I’ve also started offering consulting services to help teams migrating to GitHub Actions.

My contact information is at the top of this page; feel free to reach out with any questions or comments.
